After AI Went Mainstream: What Actually Started Breaking in 2026

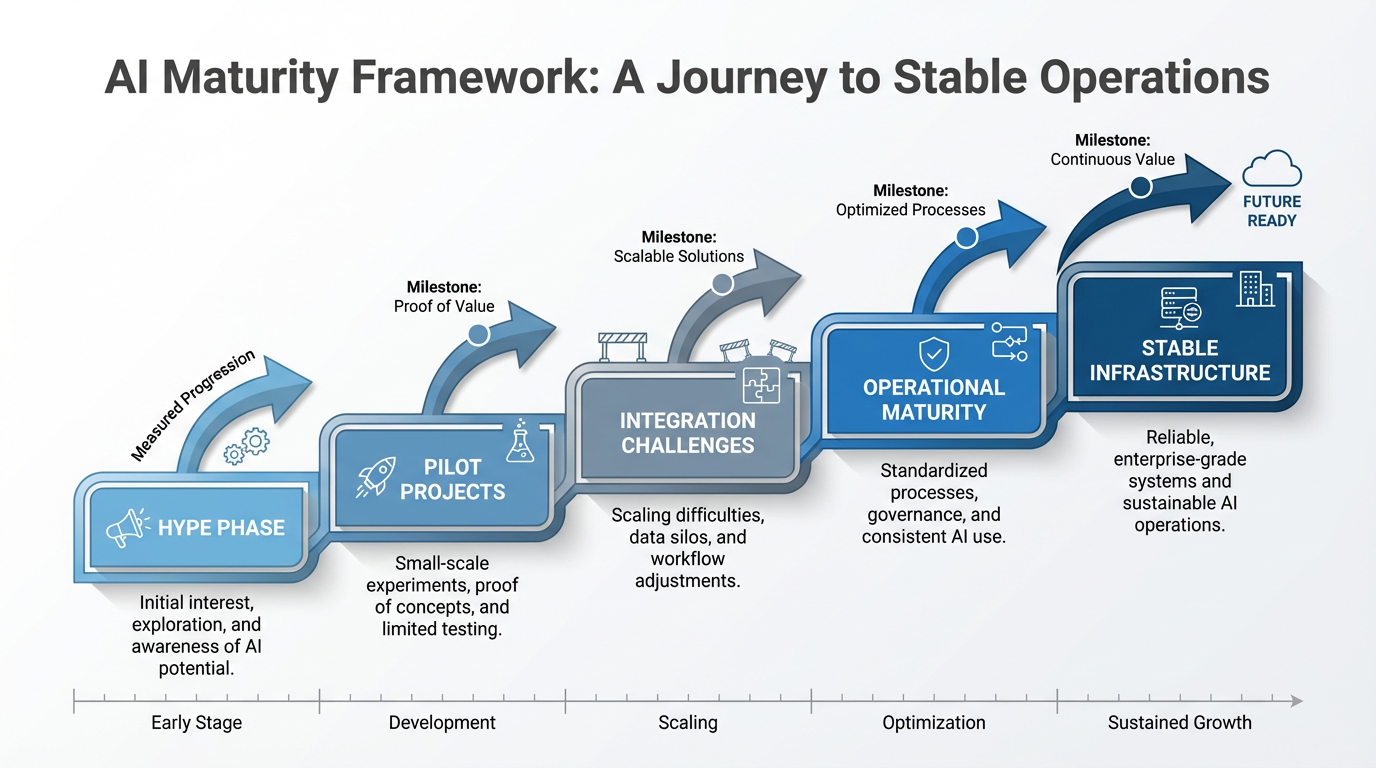

Once AI became ordinary, its weaknesses became impossible to ignore. This analysis examines the friction points, accountability gaps, and integration challenges that emerged after AI's mainstream adoption—and why the next phase will be defined by restraint rather than expansion.

Why normalization exposed the real limits of artificial intelligence

In 2025, artificial intelligence crossed a quiet but important threshold. It stopped being experimental and became ordinary.

As explored in our recent analysis, 2025 was the year AI went mainstream, embedding itself into enterprise workflows, public services, and everyday tools. That transition marked progress—but it also revealed something else.

Once AI became normal, its weaknesses became impossible to ignore.

When AI Stops Being Impressive

During the early hype cycle, AI systems were judged by what they could do in isolation: generate text, write code, recognize images. In real-world deployment, those capabilities were rarely the problem.

The friction appeared elsewhere: in context, accountability, and reliability. Industry observers noted that many AI deployments struggled not because models failed, but because organizations underestimated the complexity of integrating them into existing workflows (Harvard Business Review).

Mainstream adoption removed the novelty. What remained was reality.

The Integration Problem No One Wanted to Talk About

As organizations scaled AI usage, it became clear that the hardest challenges had little to do with the technology itself. Data pipelines were fragmented. Internal documentation was outdated. Decision authority was unclear.

AI did not create these problems—it exposed them. This pattern mirrors previous enterprise technology shifts, where tools matured faster than the institutions using them (McKinsey & Company).

Automation Without Ownership

One of the most common failures that surfaced after AI went mainstream was a lack of ownership. AI-generated outputs were often treated as neutral or self-validating. When results were wrong, responsibility was unclear.

Research into enterprise AI adoption shows that unclear governance significantly increases operational risk and slows decision-making (World Economic Forum).

This problem rarely appears in pilot projects. It emerges only after AI becomes routine—when speed matters more than experimentation.

Why Normalization Changed the Conversation

Once AI became ordinary, organizations stopped asking what it could do and started asking where it introduced friction, delay, or risk. Evaluation shifted from capability to dependability.

This transition follows a familiar pattern seen in other infrastructure technologies. When computing, electricity, or the internet became commonplace, their failures mattered more than their promise.

AI reached that stage in 2025.

What Comes After the Mainstream Moment

The next phase of AI adoption will be defined less by expansion and more by restraint. Organizations are already reducing tool sprawl and focusing on systems that integrate cleanly, fail predictably, and align with human judgment (Gartner).

The most successful AI implementations will not be the most advanced. They will be the most boring—and the most reliable.

Looking Back to Move Forward

Understanding what started breaking after AI went mainstream requires understanding how it got there. For context, readers may find it useful to review:

2025: The Year AI Went Mainstream — A Complete Year in Review

Normalization changed the conversation. What comes next will determine whether AI matures into dependable infrastructure or remains a source of friction.

Sources & References

- Harvard Business Review. Why AI Projects Fail. https://hbr.org/2024/10/why-ai-projects-fail

- McKinsey & Company. The Real Challenge of AI Transformation. https://www.mckinsey.com/capabilities/quantumblack/our-insights/the-real-challenge-of-ai-transformation

- World Economic Forum. AI Governance and Enterprise Risk. https://www.weforum.org/stories/2024/11/ai-governance-enterprise-risk/

- Gartner. AI Trends Beyond the Hype. https://www.gartner.com/en/articles/ai-trends-beyond-the-hype

Published by Vintage Voice News

Disclaimer: This analysis is for informational purposes only and does not constitute investment advice. Markets and competitive dynamics can change rapidly in the technology sector. Taggart is not a licensed financial advisor and does not claim to provide professional financial guidance. Readers should conduct their own research and consult with qualified financial professionals before making investment decisions.

Taggart Buie

Writer, Analyst, and Researcher