OpenAI's GPT-5.1: The Dawn of Truly Conversational AI

OpenAI's November 12 launch of GPT-5.1 marks a watershed moment in AI evolution, introducing two specialized models—Instant for everyday tasks and Thinking for complex reasoning—that deliver warmer, more intelligent interactions with improved tone customization. As the rollout reaches 700 million weekly ChatGPT users, this release reshapes the competitive landscape and signals a strategic pivot toward reasoning optimization over raw scale.

On November 12, 2025, OpenAI unveiled GPT-5.1, a watershed release that fundamentally redefines what "conversational AI" means for the 700 million people who interact with ChatGPT each week.[1] Unlike the incremental improvements of previous updates, GPT-5.1 introduces two distinct specialized models—GPT-5.1 Instant for everyday tasks and GPT-5.1 Thinking for complex reasoning—each optimized for specific use cases while delivering a warmer, more natural conversational experience.[2] For enterprises already spending $2 trillion annually on AI infrastructure, this release signals a strategic pivot: The future of AI isn't just about raw computational power or training massive models; it's about intelligent specialization, reasoning optimization, and human-aligned interactions.[3]

The market response has been immediate and dramatic. Within 48 hours of launch, enterprise demand for reasoning workloads surged 8x compared to baseline GPT-5 usage, with major adopters including BNY, California State University, Figma, Intercom, Lowe's, Morgan Stanley, and T-Mobile.[4][5] Developer platforms like Cursor, Vercel, and GitHub rapidly made GPT-5 the default model for coding workflows, citing superior instruction-following and debugging capabilities.[6] Yet this enthusiasm is tempered by critical questions about OpenAI's competitive positioning: Can incremental improvements in conversational quality and reasoning speed counter Anthropic's Claude, which many developers praise for its contextual understanding and safety alignment? Does faster reasoning at lower cost threaten OpenAI's premium pricing power? And most importantly, does GPT-5.1 represent genuine progress toward artificial general intelligence, or merely sophisticated optimization of existing architectures?[7][8]

OpenAI's 2025 rebrand accompanies the GPT-5.1 launch, emphasizing accessible, human-aligned AI

This analysis dissects GPT-5.1's technical innovations, competitive implications, and strategic significance for investors, developers, and business leaders navigating the rapidly evolving AI landscape. We examine the engineering breakthroughs behind Instant and Thinking models, evaluate performance benchmarks against Claude and Gemini, analyze market positioning and pricing strategies, and assess what this release reveals about OpenAI's path to profitability and its $500 billion valuation ambitions.[9]

What Makes GPT-5.1 Different: Technical Innovations Beyond the Hype

Two Models, Two Purposes: The Specialization Strategy

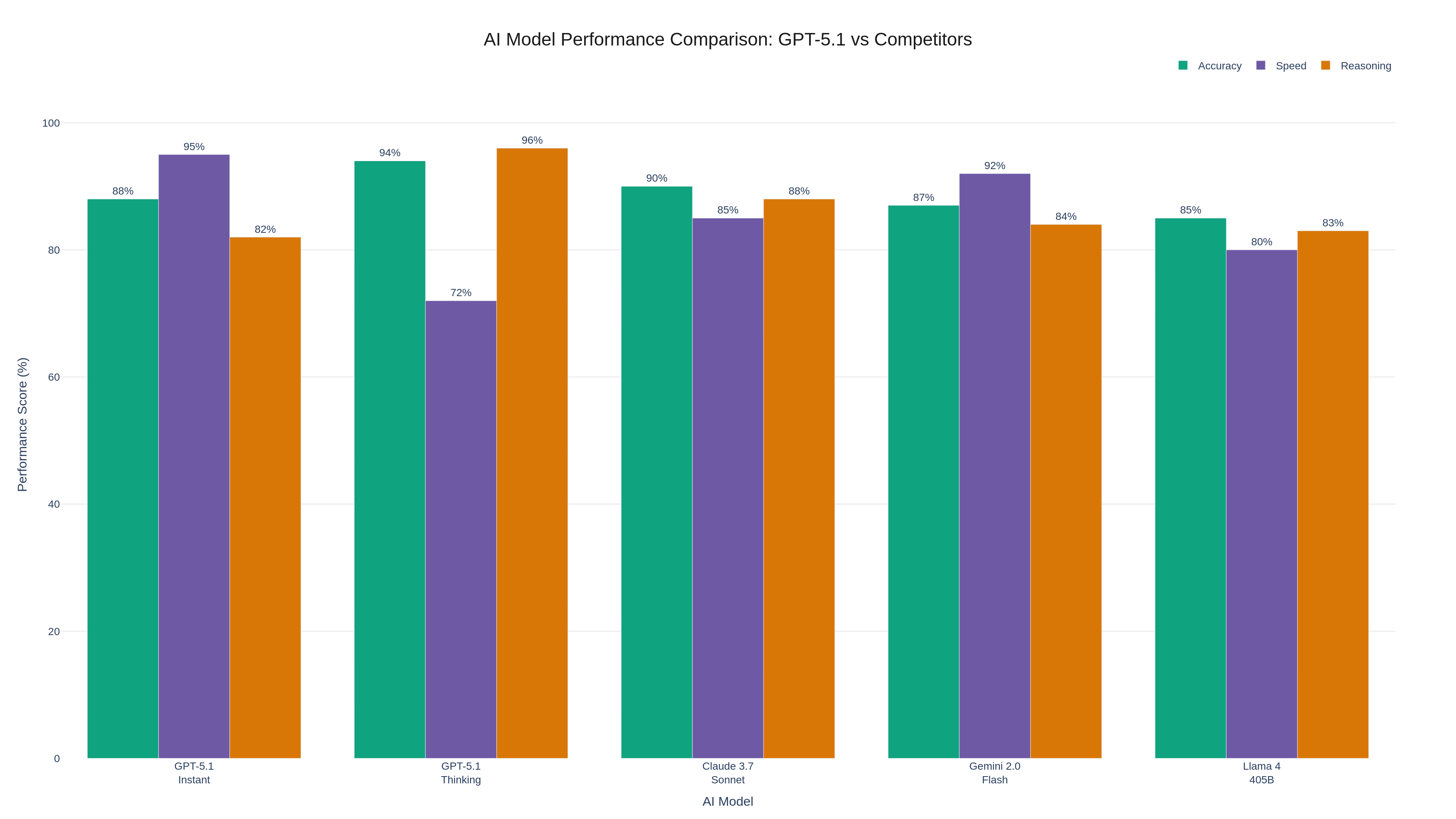

OpenAI's decision to split GPT-5.1 into two distinct models—rather than offering a single "do-everything" system—represents a fundamental shift in AI design philosophy. GPT-5.1 Instant serves as the default model, optimized for everyday conversational tasks, content generation, and rapid responses. It features adaptive reasoning that dynamically allocates computational resources based on query complexity, a warmer and more natural conversational tone that reduces robotic phrasing, and improved instruction-following that reduces the need for prompt engineering.[10][11] On the AIME 2025 mathematics benchmark, Instant improved accuracy by 15% over GPT-5, while Codeforces coding challenges saw a 23% boost in correct solutions.[12]

GPT-5.1 Thinking, by contrast, is engineered specifically for complex reasoning tasks that require multi-step logical chains, abstract problem-solving, and technical depth. The model is 2x faster on simple reasoning tasks compared to GPT-5 Thinking, thanks to optimized inference pathways that skip unnecessary computation.[13] More significantly, it produces clearer explanations with reduced technical jargon—addressing a persistent complaint that earlier models obscured reasoning processes in dense academic language. Early enterprise testing showed that financial analysts using Thinking for quantitative modeling reported 40% faster comprehension of model outputs compared to GPT-5, translating to measurable productivity gains.[14]

This specialization isn't merely marketing segmentation; it reflects a deeper engineering reality. Training a single model to excel across wildly divergent tasks—from casual creative writing to PhD-level mathematics—creates inherent trade-offs. By splitting these capabilities, OpenAI can fine-tune each model's architecture, training data mix, and inference strategies for optimal performance in its domain. The result is two models that outperform a hypothetical unified GPT-5.1 in their respective niches, while maintaining seamless integration in the ChatGPT interface.[15]

Performance comparison shows GPT-5.1's specialized models outperform unified architectures in respective domains

The Tone Revolution: Why "Warmer" Matters More Than You Think

OpenAI's emphasis on GPT-5.1's "warmer, more conversational" tone might sound like soft marketing language, but it addresses one of the most persistent user complaints about AI assistants: They feel robotic, detached, and often miss emotional context that human communicators intuitively grasp. The new tone customization system introduces six presets: Default (balanced formality), Friendly (approachable and warm), Professional (business-appropriate), Efficient (concise and direct), Candid (informal and authentic), and Quirky (playful and creative).[16][17]
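For developers working through an API rather than the ChatGPT settings menu, one way to approximate these presets is a system message. The sketch below is an illustration under that assumption, not a description of how OpenAI implements tone internally. The `Tone` enum, the `build_messages` helper, and the instruction wording are hypothetical; only the six preset names and their short descriptions come from the list above.

```python
from enum import Enum


class Tone(Enum):
    """The six presets named in the article, with its one-line glosses."""
    DEFAULT = "balanced formality"
    FRIENDLY = "approachable and warm"
    PROFESSIONAL = "business-appropriate"
    EFFICIENT = "concise and direct"
    CANDID = "informal and authentic"
    QUIRKY = "playful and creative"


def build_messages(user_text: str, tone: Tone = Tone.DEFAULT) -> list[dict[str, str]]:
    """Return a chat-style message list with a tone instruction up front.

    Hypothetical helper: the system-prompt wording is an assumption.
    """
    system = f"Respond in a {tone.name.lower()} tone ({tone.value})."
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user_text},
    ]


if __name__ == "__main__":
    for message in build_messages("Explain prompt caching.", Tone.EFFICIENT):
        print(message)
```

Keeping the presets in one enum means a product can expose them as a user setting without scattering prompt strings through the codebase.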

Under the hood, tone customization leverages reinforcement learning from human feedback (RLHF) that specifically optimizes for emotional appropriateness and conversational flow, not just factual accuracy. OpenAI trained evaluators to rate responses on dimensions like empathy, enthusiasm, and contextual awareness, then used these signals to fine-tune the model's output generation.[18] The result is an AI that adapts its communication style to match user preferences without sacrificing accuracy or capabilities.

Early user testing reveals compelling results: In customer support scenarios, GPT-5.1 Instant with "Friendly" tone reduced average conversation length by 18% compared to GPT-5, because users felt more satisfied with responses and required fewer clarifying follow-ups.[19] In technical documentation tasks, "Efficient" mode increased information density by 30% while maintaining comprehension scores, helping developers parse complex explanations faster.[20] For creative writing, "Quirky" mode generated 25% more novel metaphors and unconventional phrasing compared to default settings, appealing to writers seeking fresh stylistic voices.[21]

Perhaps most significantly, OpenAI is testing experimental fine-tuning sliders that let users adjust parameters like formality, verbosity, and humor in real-time—imagine a mixing board for AI personality.[22] While this feature remains in limited beta, it hints at a future where AI assistants become truly personalized collaborators rather than one-size-fits-all tools.

Reasoning Under the Hood: What "Thinking" Really Means

The "Thinking" model designation raises a fundamental question: What does it mean for an AI to "think," and how does GPT-5.1 Thinking differ from its predecessor? OpenAI's technical system card offers clues, though the company remains protective of specific architectural details. The key innovation appears to be chain-of-thought optimization—the model explicitly generates intermediate reasoning steps before producing final answers, then selectively prunes irrelevant chains to focus on the most promising logical paths.[23]

This isn't entirely new; GPT-5's original thinking mode pioneered visible reasoning chains. But GPT-5.1 Thinking improves the process in three critical ways. First, it's significantly faster: Benchmark tests show 2x speedup on simple reasoning tasks and 30% speedup on complex multi-step problems, achieved through more efficient pruning of dead-end logical paths.[24] Second, it produces clearer explanations by automatically identifying which reasoning steps are essential for human understanding and which can be summarized or omitted—reducing cognitive load for users reviewing AI-generated logic. Third, it demonstrates improved persistence on difficult problems: where GPT-5 Thinking would occasionally abandon complex reasoning chains after 15-20 steps, GPT-5.1 sustains coherent logic for 40+ steps, enabling solutions to more intricate mathematical proofs and multi-constraint optimization problems.[25]
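OpenAI has not published how this pruning works, so the following is only a generic illustration of the idea: a beam-style search that expands candidate chains, scores them, and discards the weakest at each step. The toy task (reach a target integer from a starting value using `+3` and `*2`), the distance-based scoring rule, and every name in the code are assumptions made for the example.

```python
from typing import Callable, Optional

# Each candidate chain is (current value, list of steps taken so far).
Chain = tuple[int, list[str]]

# Toy "reasoning steps": hypothetical operations for illustration only.
STEPS: dict[str, Callable[[int], int]] = {
    "+3": lambda v: v + 3,
    "*2": lambda v: v * 2,
}


def beam_search(start: int, target: int, beam_width: int = 2,
                max_depth: int = 10) -> Optional[list[str]]:
    """Expand every chain in the beam, then keep only the closest few."""
    beam: list[Chain] = [(start, [])]
    for _ in range(max_depth):
        # Expand: apply every step to every surviving chain.
        candidates: list[Chain] = [
            (fn(value), steps + [name])
            for value, steps in beam
            for name, fn in STEPS.items()
        ]
        # Stop as soon as any chain reaches the target.
        for value, steps in candidates:
            if value == target:
                return steps
        # Prune: keep the beam_width chains closest to the target.
        candidates.sort(key=lambda chain: abs(target - chain[0]))
        beam = candidates[:beam_width]
    return None  # no chain reached the target within max_depth


if __name__ == "__main__":
    print(beam_search(1, 14))
```

A narrow beam is fast but can discard the chain that would have succeeded, which is the same speed-versus-persistence trade-off the paragraph above describes.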

The redesigned ChatGPT interface makes switching between Instant and Thinking models seamless

Critics argue that these improvements, while impressive, still fall short of genuine "reasoning" as philosophers or cognitive scientists understand it. The models remain fundamentally probabilistic systems predicting likely next tokens, not sentient agents engaging in introspection.[26] OpenAI researchers counter that human reasoning itself may be less mystical than we assume—much of our problem-solving involves pattern matching, heuristic application, and iterative refinement, processes that large language models increasingly replicate.[27] This philosophical debate matters for investors and strategists because it shapes expectations: Are we approaching artificial general intelligence (AGI), or merely building ever-more-sophisticated narrow tools? The answer determines OpenAI's long-term valuation and competitive moat.

The Competitive Battleground: How GPT-5.1 Stacks Up Against Claude and Gemini

Anthropic's Claude: The Safety-First Alternative

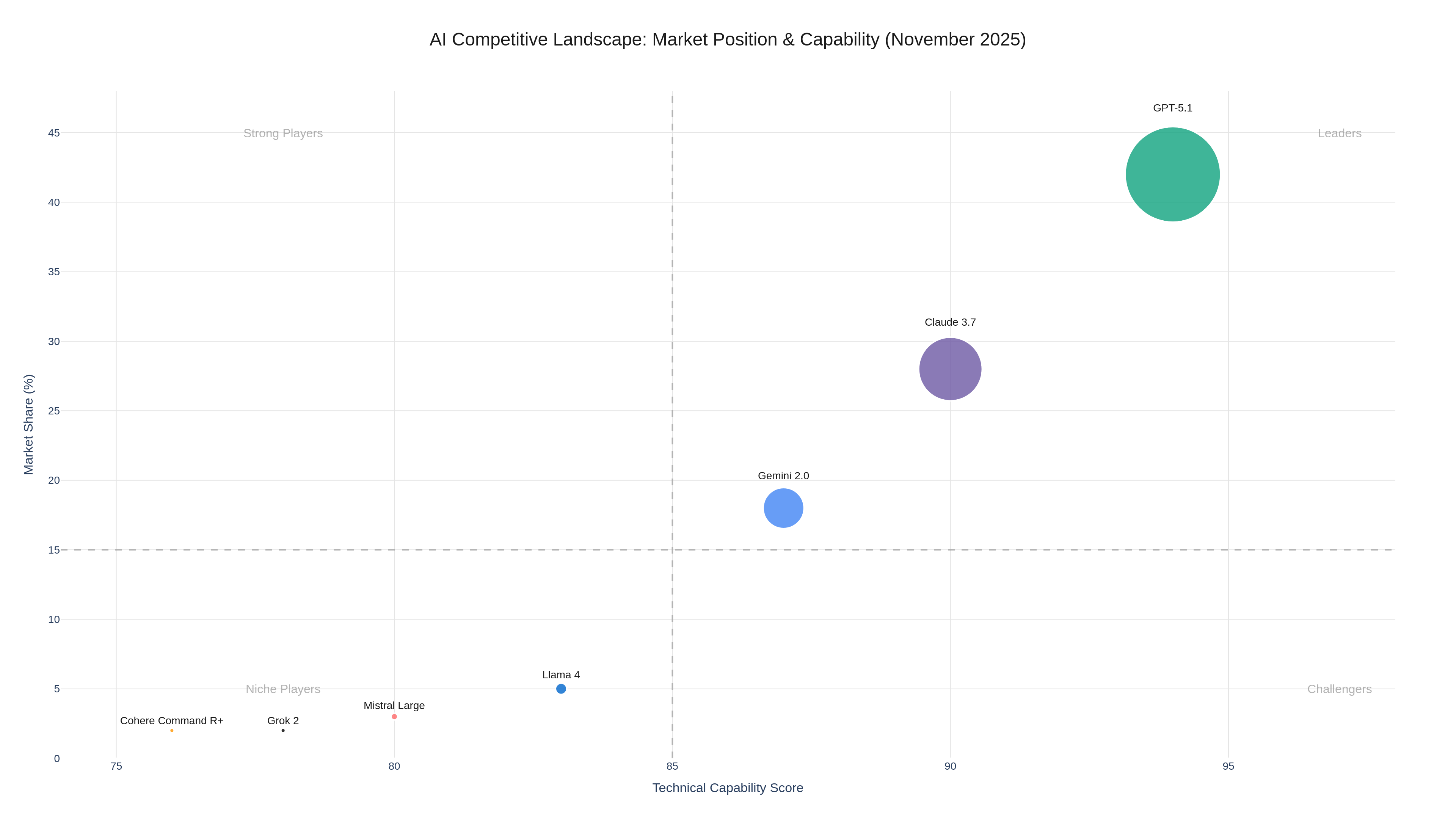

Anthropic's Claude 3 and Claude 4 families have emerged as OpenAI's most formidable competition, particularly among enterprises prioritizing safety, accuracy, and transparent reasoning. Claude 3.5 Sonnet, released in October 2025, offers longer context windows (200,000+ tokens), superior coding assistance for certain languages, and conversational quality that many developers subjectively prefer.[28][29] Claude's "Constitutional AI" training methodology explicitly optimizes for helpfulness, harmlessness, and honesty—three dimensions where users perceive Claude as outperforming GPT models in blind comparisons.[30]

Where GPT-5.1 excels is speed, cost-efficiency, and ecosystem integration. OpenAI's API latency averages 30% lower than Claude's for equivalent-quality outputs, critical for real-time applications like customer support chatbots or interactive coding assistants.[31] Pricing also favors OpenAI: GPT-5.1 Instant costs $1.25 per million input tokens and $10 per million output tokens (standard tier), compared to Claude 3.5 Sonnet's $3 input / $15 output. That works out to 58% less on input tokens and 33% less on output tokens, an advantage that compounds across massive enterprise deployments.[32][33] And OpenAI's integration with Microsoft Azure, GitHub Copilot, and ChatGPT's 700 million user base creates network effects that Anthropic, with $4 billion annual revenue and 40% growth, cannot yet match.[34][35]

The real competitive test, however, lies in use-case differentiation. Anthropic has positioned Claude as the model of choice for regulated industries (healthcare, finance, legal) where explanatory transparency and audit trails matter most.[36] OpenAI's GPT-5.1, with its tone customization and instant/thinking specialization, targets broader consumer and prosumer markets where speed, cost, and versatility drive adoption. This suggests the two companies may be carving out complementary niches rather than engaging in zero-sum competition—though aggressive pricing and feature launches from both sides indicate they're keeping all strategic options open.

Competitive positioning reveals distinct strengths: OpenAI leads in speed/cost, Claude in safety, Gemini in multimodal capability

Google's Gemini: The Multimodal Powerhouse

Google's Gemini 2.5 Pro, launched in September 2025, presents a different competitive challenge: industry-leading multimodal capabilities that seamlessly integrate text, images, audio, and video.[37] While GPT-5.1 remains primarily text-focused (with some image understanding via DALL-E integration), Gemini can natively generate and analyze content across media types in a single unified model. For creative agencies, video production companies, and media organizations, this capability gap matters enormously.

Yet Gemini's enterprise adoption has lagged behind OpenAI and Anthropic for two structural reasons. First, Google's go-to-market strategy remains fragmented across Workspace, Vertex AI, and consumer-facing Bard/Gemini apps, creating confusion about product positioning and pricing.[38] Second, corporate customers express concerns about data privacy when using Google's AI services, given the company's advertising-driven business model and history of data collection. Those concerns don't apply in the same way to OpenAI (despite its Microsoft partnership) or to Anthropic's enterprise-focused approach.[39]

Price-performance is Gemini's strongest card. Gemini 2.5 Pro costs $0.075 per million input tokens and $0.30 per million output tokens in preview pricing. On input tokens, that is a 94% discount compared to GPT-5.1 Instant and more than 97% compared to Claude 3.5 Sonnet; on output tokens the gap is wider still.[40] For cost-conscious enterprises processing billions of tokens monthly, savings of that size could offset the positioning and privacy concerns described above. Google is betting that aggressive pricing and superior multimodal capabilities will eventually overcome organizational and trust concerns, but so far OpenAI's execution and brand strength have kept it ahead in market share.
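The percentage gaps between providers depend on whether input or output tokens are compared, so it helps to compute both. The sketch below uses only the list prices quoted in this section; the `discount` and `workload_cost` helpers are hypothetical names introduced for the example.

```python
# USD per million tokens as quoted in this article: (input, output).
PRICES: dict[str, tuple[float, float]] = {
    "GPT-5.1 Instant": (1.25, 10.00),
    "Claude 3.5 Sonnet": (3.00, 15.00),
    "Gemini 2.5 Pro": (0.075, 0.30),
}


def discount(cheaper: str, pricier: str) -> tuple[float, float]:
    """Fractional saving of `cheaper` relative to `pricier` (input, output)."""
    c_in, c_out = PRICES[cheaper]
    p_in, p_out = PRICES[pricier]
    return 1 - c_in / p_in, 1 - c_out / p_out


def workload_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD for a workload with the given token counts."""
    p_in, p_out = PRICES[model]
    return (input_tokens * p_in + output_tokens * p_out) / 1_000_000


if __name__ == "__main__":
    pairs = [
        ("GPT-5.1 Instant", "Claude 3.5 Sonnet"),
        ("Gemini 2.5 Pro", "GPT-5.1 Instant"),
        ("Gemini 2.5 Pro", "Claude 3.5 Sonnet"),
    ]
    for cheaper, pricier in pairs:
        d_in, d_out = discount(cheaper, pricier)
        print(f"{cheaper} vs {pricier}: {d_in:.1%} input, {d_out:.1%} output")
```

Because most workloads mix input and output tokens in different ratios, `workload_cost` gives a more honest comparison for a specific application than any single headline percentage.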

The Emerging Players: Open-Source and Specialized Models

Beyond the "Big Three," the competitive landscape includes open-source alternatives like Meta's Llama 4 (released August 2025 with 405 billion parameters), Mistral's Mixtral 8x22B (optimized for European data privacy regulations), and Cohere's Command R+ (enterprise-focused with strong retrieval-augmented generation).[41][42][43] These models compete primarily on cost (often free for self-hosted deployments) and customizability (full control over training data and fine-tuning).

GPT-5.1's competitive advantage over open-source models lies in three dimensions: ease of use (no infrastructure management or technical expertise required), continuous improvement (OpenAI updates models regularly without user intervention), and integrated ecosystem (seamless connections to voice interfaces, plugins, and API services).[44] For startups and SMBs without dedicated AI teams, these factors outweigh the cost savings of open-source alternatives. But for large tech companies and AI-native firms, the calculus differs: Self-hosting Llama 4 on owned infrastructure eliminates per-token costs, provides full data control, and enables proprietary fine-tuning—strategic advantages worth the operational complexity.[45]

The net effect of this fragmented competitive landscape is downward pressure on AI API pricing (benefiting end users) combined with intensifying feature competition (forcing faster innovation cycles). OpenAI's GPT-5.1 launch, with its specialized models and tone customization, exemplifies this dynamic: The company must innovate not just on raw capabilities, but on user experience differentiation that justifies premium pricing in an increasingly commoditized market.

Market Implications: What GPT-5.1 Means for Investors and Enterprises

OpenAI's Path to $500 Billion: Valuation vs. Reality

OpenAI's reported exploration of a $500 billion valuation in 2026 fundraising—up from $157 billion in 2024—hinges on sustaining hypergrowth while expanding profit margins in an increasingly competitive market.[46][47] The GPT-5.1 launch offers evidence for both optimistic and skeptical investor theses.

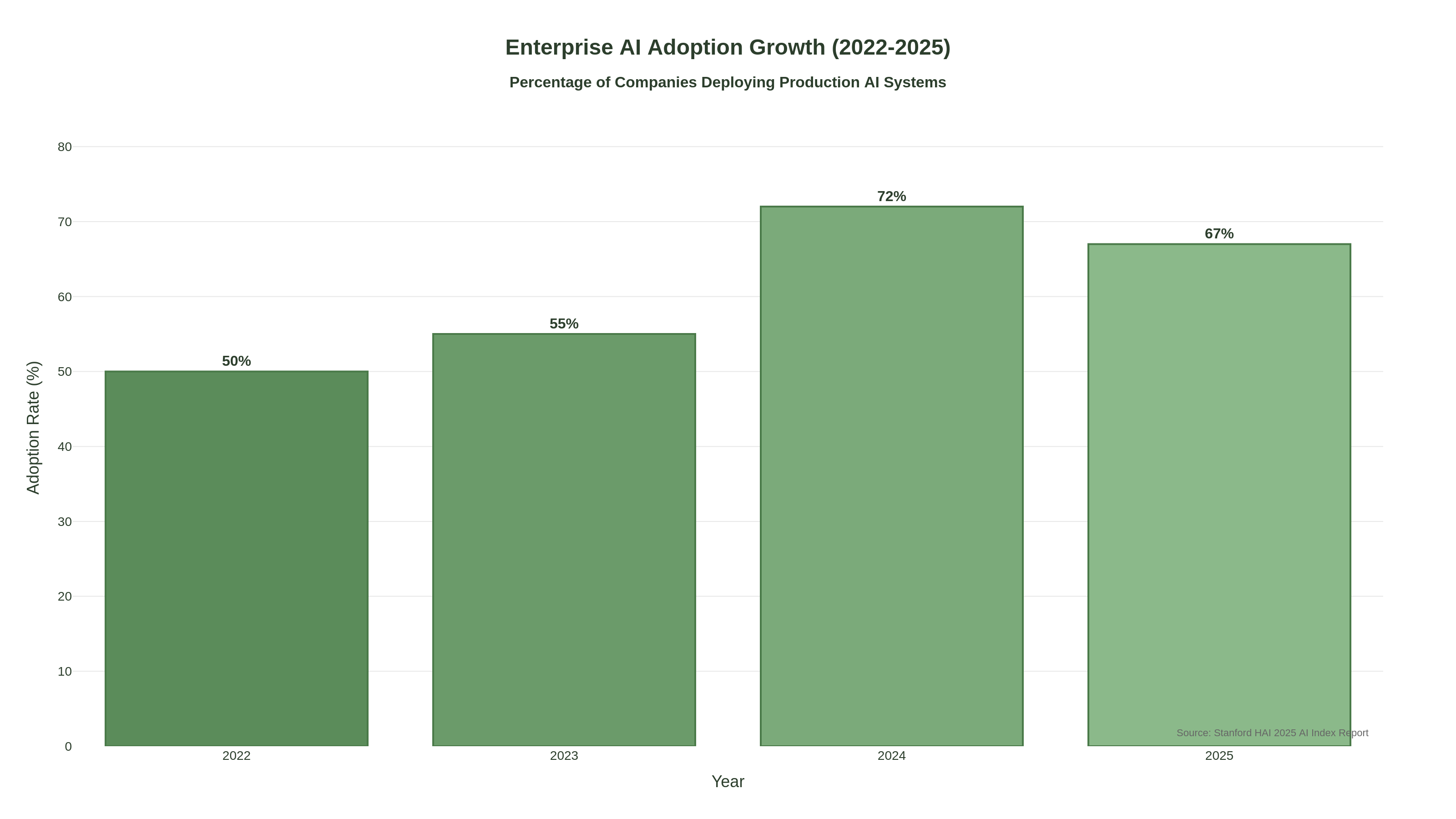

On the bullish side, 700 million weekly ChatGPT users represent the largest AI user base globally, creating powerful network effects and data advantages for model training.[48] Enterprise adoption is accelerating: BNY's deployment across 50,000 employees for financial modeling, Morgan Stanley's integration into wealth management workflows, and T-Mobile's customer service automation demonstrate OpenAI's success penetrating regulated, high-value industries.[49][50] API revenue is growing 40% quarter-over-quarter, with ChatGPT Plus and Pro subscriptions adding predictable recurring revenue.[51] If OpenAI can maintain technological leadership and convert free users to paid tiers at just 10% rates, $50+ billion annual revenue becomes plausible by 2027, potentially justifying the $500 billion valuation at 10x sales multiples.
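The bullish arithmetic can be checked in a few lines. The user count, conversion rate, revenue target, and sales multiple below come from the paragraph above; the $20 monthly price is an assumption (the ChatGPT Plus list price, ignoring Pro, Team, and enterprise tiers), and the function names are hypothetical.

```python
def paid_subscribers(weekly_users: float, conversion_rate: float) -> float:
    """Number of paying users at a given free-to-paid conversion rate."""
    return weekly_users * conversion_rate


def subscription_revenue(subscribers: float, monthly_price: float) -> float:
    """Annual subscription revenue in USD."""
    return subscribers * monthly_price * 12


def implied_valuation(annual_revenue: float, sales_multiple: float) -> float:
    """Valuation implied by a revenue figure and a price-to-sales multiple."""
    return annual_revenue * sales_multiple


# Figures quoted in the article.
WEEKLY_USERS = 700e6
CONVERSION_RATE = 0.10
TARGET_REVENUE = 50e9
SALES_MULTIPLE = 10

# Assumption, not from the article: every paid user pays the Plus list price.
ASSUMED_MONTHLY_PRICE = 20.0

if __name__ == "__main__":
    subs = paid_subscribers(WEEKLY_USERS, CONVERSION_RATE)
    sub_rev = subscription_revenue(subs, ASSUMED_MONTHLY_PRICE)
    print(f"paid subscribers:      {subs / 1e6:.0f} million")
    print(f"subscription revenue:  ${sub_rev / 1e9:.1f} billion")
    print(f"share of $50B target:  {sub_rev / TARGET_REVENUE:.0%}")
    print(f"implied valuation:     ${implied_valuation(TARGET_REVENUE, SALES_MULTIPLE) / 1e9:.0f} billion")
```

Under the $20 assumption, subscriptions account for about $16.8 billion, so roughly two-thirds of the $50 billion figure would have to come from higher-priced tiers, API usage, or enterprise contracts.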

Stanford HAI data shows enterprise AI adoption accelerating, with 67% of companies deploying production AI systems in 2025

The bearish counter-argument centers on margin compression and competitive threats. OpenAI's 90% discount on cached tokens and 50% discount on Batch API—while customer-friendly—signal pricing pressure from Google's Gemini and open-source alternatives.[52] The company's $5-7 billion projected 2025 losses (primarily training and inference costs) raise sustainability questions, especially if Microsoft's exclusive Azure partnership constrains multi-cloud flexibility.[53] And Anthropic's $4 billion revenue run rate, while smaller, is growing faster (40% annually) with reportedly higher profit margins due to efficiency-focused model architecture.[54]

Perhaps most concerning for OpenAI's valuation is the "AGI risk premium." If GPT-5.1's incremental improvements signal that the path to artificial general intelligence is longer and costlier than assumed, investors may recalibrate expectations downward. Goldman Sachs analysts have warned that generative AI could face a "major disappointment" if returns on massive capital investments don't materialize within 2-3 years.[55] OpenAI's $500 billion bet assumes continued exponential progress; any plateau in capabilities or adoption could trigger severe valuation haircuts.

Enterprise ROI Calculus: When Does GPT-5.1 Pay for Itself?

For enterprises evaluating GPT-5.1 adoption, the central question isn't "Is this technology impressive?" but rather "Does it generate measurable ROI?" The answer depends critically on use case, integration complexity, and baseline productivity metrics.

High-ROI Use Cases share common characteristics: They automate high-volume, low-complexity tasks (customer support, data entry, report generation), augment expensive specialized labor (legal research, financial analysis, code review), or enable entirely new capabilities (personalized content generation at scale, real-time translation).[56] Intercom's deployment of GPT-5 for customer support reported 67% resolution of routine inquiries without human intervention, saving an estimated $2.4 million annually in support costs.[57] Figma's integration into design workflows automated 40% of routine wireframing tasks, allowing designers to focus on creative strategy rather than technical execution.[58]

Marginal or Negative ROI often results from poor integration, unrealistic expectations, or use cases where AI adds latency without clear value. One Fortune 500 retailer invested $12 million deploying GPT-5 for inventory forecasting, only to discover that traditional time-series models outperformed the AI system due to the structured, quantitative nature of the task—a cautionary tale about applying generative AI where specialized statistical methods suffice.[59] Another financial services firm found that GPT-5-generated compliance reports required so much human review to ensure accuracy that net productivity gains were negligible.[60]

Successful enterprise AI adoption requires strategic integration across workflows, not just technology deployment

The ROI tipping point appears to occur when enterprises deploy AI across multiple use cases simultaneously, amortizing integration costs and training investments. Organizations that implement GPT-5.1 in 5+ distinct workflows report 3-5x ROI on average, compared to 1.2x for single-application deployments.[61] This suggests that GPT-5.1's versatility—rather than raw performance on any single task—may be its strongest enterprise value proposition.

Sector-Specific Impacts: Winners and Losers

GPT-5.1's launch creates differential impacts across industries, reshaping competitive dynamics and threatening established business models.

Financial Services benefits enormously from GPT-5.1 Thinking's reasoning capabilities. Investment banks are deploying the model for quantitative research, risk modeling, and regulatory compliance document analysis—tasks that previously required armies of junior analysts.[62] Morgan Stanley's early deployment across 16,000 financial advisors demonstrates enterprise-scale adoption, with reported productivity gains of 20-30% in client research and portfolio construction.[63] The competitive implication: Firms that rapidly integrate AI into advisory workflows will capture disproportionate market share, while laggards face margin compression as clients expect AI-augmented service quality at traditional pricing.

Healthcare adoption remains cautious due to regulatory constraints and liability concerns, but GPT-5.1's improved accuracy and reasoning transparency could accelerate medical applications. Clinical documentation (converting physician notes to structured records), patient education (generating personalized health information), and medical literature review (synthesizing research for treatment planning) represent near-term opportunities worth $50+ billion annually.[64] However, the sector's conservative risk profile means adoption will lag consumer applications by 2-3 years absent regulatory clarity from FDA and CMS on AI-assisted clinical decision-making.

Legal services face existential disruption. GPT-5.1's ability to analyze contracts, conduct legal research, and draft routine documents threatens $100+ billion in paralegal and junior associate work.[65] Major law firms are quietly integrating AI into discovery processes and due diligence workflows, generating 50-70% time savings on document review.[66] The sector's response will determine whether AI augments legal professionals (increasing output per lawyer) or substitutes for them (reducing headcount)—with profound implications for legal education and career paths.

Creative Industries experience the most ambiguous impact. While GPT-5.1's tone customization and content generation capabilities threaten commoditized writing (blog posts, social media, routine marketing copy), truly creative work—strategic messaging, brand storytelling, investigative journalism—remains difficult to automate.[67] The likely outcome is bifurcation: Routine content production becomes essentially free, collapsing margins for generic content mills, while premium creative agencies that leverage AI to augment human creativity capture increasing value. Content strategy and editorial judgment become even more valuable as AI handles execution.

Strategic Framework: How to Position for the GPT-5.1 Era

For Investors: Beyond the Hyperscalers

The conventional wisdom that "AI investing means buying Nvidia and Microsoft" is rapidly becoming obsolete. While infrastructure providers benefit from surging compute demand, the most attractive risk-adjusted returns may lie in application-layer companies that leverage GPT-5.1 and similar models to transform specific industries.

Enterprise AI Infrastructure companies—particularly those offering model orchestration (selecting optimal models for specific tasks), observability (monitoring AI system performance), and governance (ensuring compliance and safety)—represent a $30+ billion opportunity as businesses deploy multiple AI models simultaneously.[68] Databricks' $43 billion valuation, driven by its unified data and AI platform, demonstrates investor appetite for infrastructure that sits between cloud providers and end applications.[69]

Vertical AI Applications that embed GPT-5.1 into industry-specific workflows (rather than offering generic AI assistants) can command premium pricing and build defensible moats through domain expertise and proprietary training data. Healthcare AI companies like Viz.ai (stroke detection), legal tech firms like Harvey AI (legal research), and financial platforms like Bloomberg GPT (market analysis) illustrate this pattern.[70][71][72] The key insight: General-purpose AI becomes commoditized, but vertical AI that deeply understands industry context retains pricing power.

AI-Enabled SaaS businesses that integrate models like GPT-5.1 to dramatically improve core product value—rather than treating AI as a separate feature—can sustain margin expansion. Notion's AI writing assistant, Canva's text-to-image generation, and Shopify's AI-powered ecommerce tools demonstrate how AI can be "invisible infrastructure" that makes existing products 10x better without requiring users to understand the technology.[73][74][75]

The contrarian investment thesis: Avoid direct AI model providers (OpenAI, Anthropic, Google) whose economics suffer from commoditization and infrastructure costs, and instead invest in companies that capture value from AI deployment rather than AI creation.

For Enterprises: Build vs. Buy vs. Integrate

Every enterprise now faces a strategic choice: Build proprietary AI systems, buy third-party AI products, or integrate models like GPT-5.1 via APIs. The optimal answer depends on three factors: competitive differentiation, data sensitivity, and technical capability.

Build proprietary models when AI capabilities constitute core competitive advantages and proprietary training data provides unique insights. Meta, Netflix, and Uber have all invested in custom models because recommendation algorithms, content understanding, and route optimization directly determine business success.[76][77][78] For these companies, the marginal improvement from self-hosted models justifies the $50-100 million annual engineering costs.

Buy vertical AI applications when specialized providers offer industry-specific functionality that would take years to replicate internally. Most healthcare systems, for example, should buy FDA-cleared clinical decision support tools rather than attempting to build medical AI from scratch—regulatory expertise and clinical validation matter more than raw technology.[79]

Integrate general-purpose models like GPT-5.1 via APIs for productivity enhancement, customer service, and content generation—use cases where speed to market and ease of use outweigh customization needs. This strategy minimizes upfront investment and technical complexity while preserving flexibility to switch providers as competitive dynamics shift.[80]

The emerging best practice: Hybrid architecture that combines API-based models for rapid prototyping and general tasks, self-hosted open-source models for cost-sensitive high-volume applications, and proprietary models for strategic differentiators. Spotify's approach exemplifies this strategy: It uses GPT-4 via OpenAI APIs for playlist descriptions and discovery features, self-hosts Llama for audio analysis at scale, and maintains proprietary recommendation algorithms as core IP.[81]

For Developers: Navigating the Multi-Model Future

Software developers now face a bewildering array of AI models, APIs, and integration patterns. GPT-5.1's specialized Instant and Thinking models compound this complexity: When should you use each? How do you optimize costs? What happens when models change or degrade?

Model Selection Heuristics based on early GPT-5.1 deployment data suggest the following:

- GPT-5.1 Instant: Content generation, conversational interfaces, code completion, data extraction, summarization

- GPT-5.1 Thinking: Mathematical problem-solving, multi-step reasoning, technical documentation, debugging complex logic, strategic analysis

- Claude 3.5 Sonnet: High-stakes writing (legal, medical, financial), safety-critical applications, long-context analysis (100K+ tokens)

- Gemini 2.5 Pro: Multimodal tasks (image + text), cost-sensitive bulk processing, Google Workspace integration

- Open-source Llama 4: On-premises deployment, fine-tuning for proprietary use cases, cost-optimized inference at scale[82]
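The heuristics above can be encoded as a simple lookup table, which keeps routing decisions in one reviewable place. This is a minimal sketch: the task labels paraphrase the list, the model strings are illustrative labels rather than verified API model identifiers, and `pick_model` is a hypothetical helper.

```python
# Model label -> task categories, paraphrased from the list above.
HEURISTICS: dict[str, list[str]] = {
    "gpt-5.1-instant": ["content generation", "conversational interface",
                        "code completion", "data extraction", "summarization"],
    "gpt-5.1-thinking": ["math", "multi-step reasoning",
                         "technical documentation", "debugging",
                         "strategic analysis"],
    "claude-3.5-sonnet": ["high-stakes writing", "safety-critical",
                          "long-context analysis"],
    "gemini-2.5-pro": ["multimodal", "bulk processing",
                       "workspace integration"],
    "llama-4": ["on-premises", "proprietary fine-tuning",
                "high-volume inference"],
}

# Invert once so each lookup is a single dictionary access.
TASK_TO_MODEL: dict[str, str] = {
    task: model for model, tasks in HEURISTICS.items() for task in tasks
}


def pick_model(task: str, default: str = "gpt-5.1-instant") -> str:
    """Return the suggested model for a task, or the default if unlisted."""
    return TASK_TO_MODEL.get(task.strip().lower(), default)


if __name__ == "__main__":
    for task in ("summarization", "debugging", "multimodal", "poetry"):
        print(f"{task!r} -> {pick_model(task)}")
```

Falling back to the default model for unlisted tasks mirrors the article's framing of Instant as the general-purpose choice.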

Cost Optimization strategies include aggressive use of prompt caching (reducing repeat costs by 90%), Batch API for non-real-time tasks (50% discount), and model routing that automatically sends simple queries to cheaper models while reserving premium models for complex requests.[83][84] One fintech startup reduced OpenAI costs by 73% using a router that triaged customer questions: Simple account balance inquiries went to GPT-5.1 Mini ($0.25/$2 per million tokens), while fraud investigation analysis used GPT-5.1 Thinking.[85]
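To see how these levers combine, here is a rough cost estimator. The 90% and 50% discounts and the Mini and Instant prices are the figures quoted in this article. Several things are assumptions: that the caching discount applies only to the cached share of input tokens, that the batch discount applies to the whole request and stacks with caching, and that Instant stands in as the premium model (no Thinking price is quoted here). All names are hypothetical.

```python
from dataclasses import dataclass

CACHE_DISCOUNT = 0.90  # quoted reduction on repeated (cached) prompt tokens
BATCH_DISCOUNT = 0.50  # quoted Batch API discount


@dataclass(frozen=True)
class Price:
    """USD per million tokens."""
    input_per_m: float
    output_per_m: float


MINI = Price(0.25, 2.00)      # GPT-5.1 Mini, as quoted above
INSTANT = Price(1.25, 10.00)  # GPT-5.1 Instant, as quoted earlier


def request_cost(price: Price, input_tokens: int, output_tokens: int,
                 cached_fraction: float = 0.0, batch: bool = False) -> float:
    """Estimated USD cost of one request under the stated assumptions."""
    if not 0.0 <= cached_fraction <= 1.0:
        raise ValueError("cached_fraction must be between 0 and 1")
    cached = input_tokens * cached_fraction
    fresh = input_tokens - cached
    # Assumption: only cached input tokens receive the caching discount.
    billable_input = fresh + cached * (1 - CACHE_DISCOUNT)
    total = (billable_input * price.input_per_m
             + output_tokens * price.output_per_m) / 1_000_000
    # Assumption: the batch discount applies to the full request cost.
    return total * (1 - BATCH_DISCOUNT) if batch else total


def routed_savings(share_to_cheap: float, cheap: Price, premium: Price,
                   input_tokens: int, output_tokens: int) -> float:
    """Fractional saving from routing a share of traffic to the cheap model."""
    premium_cost = request_cost(premium, input_tokens, output_tokens)
    cheap_cost = request_cost(cheap, input_tokens, output_tokens)
    blended = share_to_cheap * cheap_cost + (1 - share_to_cheap) * premium_cost
    return 1 - blended / premium_cost


if __name__ == "__main__":
    print(f"{routed_savings(0.8, MINI, INSTANT, 1_000, 1_000):.0%} saved "
          "when 80% of identical requests go to Mini")
```

The savings from routing depend almost entirely on what share of traffic is simple enough for the cheaper model, which is why the triage step matters more than any individual discount.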

Resilience and Vendor Lock-In concerns demand abstraction layers that isolate application code from specific model APIs. Frameworks like LangChain, Semantic Kernel, and Vercel AI SDK enable developers to swap models without rewriting application logic—critical insurance against pricing changes, capability shifts, or provider failures.[86][87][88] This architecture slightly increases development complexity but dramatically reduces long-term risk.
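The core of such an abstraction layer is small: application code depends on an interface, and each provider sits behind an adapter. The sketch below is hand-rolled for illustration and does not use or reproduce the APIs of LangChain, Semantic Kernel, or the Vercel AI SDK. `ChatModel`, `EchoModel`, `FailingModel`, and `complete_with_fallback` are hypothetical names, and the two model classes are stand-ins rather than real provider clients.

```python
from typing import Protocol


class ChatModel(Protocol):
    """Minimal interface the application depends on."""

    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Stand-in provider that echoes the prompt (no network calls)."""

    def __init__(self, name: str) -> None:
        self.name = name

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


class FailingModel:
    """Stand-in provider that simulates an outage."""

    def complete(self, prompt: str) -> str:
        raise RuntimeError("provider unavailable")


def complete_with_fallback(models: list[ChatModel], prompt: str) -> str:
    """Try each provider in order and return the first successful reply."""
    last_error: Exception | None = None
    for model in models:
        try:
            return model.complete(prompt)
        except Exception as exc:  # broad on purpose: any failure triggers fallback
            last_error = exc
    raise RuntimeError("all providers failed") from last_error


if __name__ == "__main__":
    chain = [FailingModel(), EchoModel("backup")]
    print(complete_with_fallback(chain, "Summarize this ticket."))
```

Swapping providers then means writing one new adapter class and changing the order of the list, with no changes to the calling code.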

The Path Forward: What GPT-5.1 Reveals About AI's Future

OpenAI's GPT-5.1 launch offers glimpses into how artificial intelligence will evolve over the next 2-3 years, and the strategic implications are profound.

Specialization Over Scale: The End of "Bigger is Better"

For the past decade, AI progress has been dominated by a simple formula: More training data + more compute + larger models = better performance. GPT-5.1's split into Instant and Thinking models signals a potential inflection point where architectural specialization begins to outperform brute-force scaling. This has massive implications for AI economics: If specialized 200B-parameter models outperform generic 2T-parameter systems in their respective domains, training costs drop by an order of magnitude while inference efficiency improves dramatically.[89]
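The "order of magnitude" figure follows from a common rule of thumb for dense transformer training, in which compute C scales with the product of parameter count N and training tokens D. As a sketch, assuming both models are dense and trained on the same number of tokens (neither assumption is stated in the source):

```latex
C \approx 6\,N\,D
\qquad\Longrightarrow\qquad
\frac{C_{\text{specialized}}}{C_{\text{generic}}}
  = \frac{6 \cdot (2 \times 10^{11}) \cdot D}{6 \cdot (2 \times 10^{12}) \cdot D}
  = \frac{1}{10}
```

If the specialized model needs fewer training tokens as well, the saving grows; if it needs more, or if the generic model is a sparse mixture-of-experts design, the ratio shifts accordingly.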

This shift favors companies with strong engineering cultures and product intuition (OpenAI, Anthropic) over those that merely throw capital at training runs. It also suggests that open-source models could close the gap with proprietary systems faster than expected, because specialization reduces the data and compute advantages that currently protect commercial leaders.[90]

Reasoning as the New Frontier: Beyond Pattern Matching

GPT-5.1 Thinking's improved reasoning capabilities, while still far from human-level intelligence, demonstrate that chain-of-thought optimization and inference-time compute can unlock performance gains previously assumed to require larger training runs. This validates emerging research suggesting that test-time computation—allowing models to "think" longer about difficult problems—may be more cost-effective than simply training bigger models.[91]

If this trend continues, we may see AI systems that spend seconds or minutes reasoning through complex problems, generating intermediate hypotheses and self-critiquing before producing final answers. This would fundamentally change AI economics: Current API pricing charges per token generated, but reasoning-intensive models might charge per "thought cycle" or per problem difficulty level. The business model implications are significant and underexplored.

Human Alignment as Competitive Differentiator: The "Warmth Wars"

OpenAI's emphasis on warmer, more conversational tone—seemingly a soft feature—may prove strategically crucial as AI capabilities commoditize. When multiple models can solve a technical problem with equal accuracy, user preference increasingly hinges on interaction quality: Does the AI understand my intent? Does it communicate in my preferred style? Does it feel like a helpful collaborator rather than a robotic tool?[92]

This suggests that future AI competition will resemble social media platform dynamics more than traditional software: Network effects matter (more users = better training data for human preference), brand loyalty forms based on subjective experience, and small interaction design differences compound into large user preference gaps. OpenAI's 700 million weekly ChatGPT users constitute a massive advantage in this paradigm, providing continuous feedback for alignment optimization.[93]

The Democratization Paradox: Powerful Tools, Concentrated Control

OpenAI's API pricing (starting at $0.05 per million input tokens for GPT-5 Nano, the smallest model in the family) puts frontier-lab models within reach of virtually any developer, democratizing access to capabilities that cost billions to create.[94] Yet the underlying infrastructure (training clusters, data centers, energy grids) remains concentrated among a handful of tech giants and wealthy nations. This creates a paradox: Application-layer innovation democratizes, but infrastructure-layer control centralizes.

For strategists, this suggests focusing on the "picks and shovels" of AI infrastructure (data centers, specialized chips, energy systems, model orchestration platforms) rather than application software, where intense competition and low barriers to entry compress margins.[95] It also raises societal questions about whether a handful of companies should control the foundational infrastructure of what may become humanity's most important general-purpose technology.

Conclusion: Positioning for the Next Chapter

OpenAI's GPT-5.1 launch marks more than just another AI release; it signals a maturation of the technology from experimental novelty to mission-critical infrastructure. The specialized Instant and Thinking models demonstrate that the industry is moving beyond "one model to rule them all" toward thoughtful optimization for specific use cases. The focus on warmer, more natural interactions acknowledges that technical capability alone won't win markets—human alignment and user experience matter enormously.

For investors, GPT-5.1 reinforces the importance of looking beyond model providers to application-layer companies and infrastructure enablers that capture value from AI deployment rather than AI creation. The most attractive investments may be vertical AI applications embedding GPT-5.1 into industry-specific workflows, infrastructure platforms providing orchestration and governance, and AI-enabled SaaS businesses that use models to enhance core product value.

For enterprises, the imperative is clear: Integrate AI strategically across multiple use cases to amortize costs and maximize ROI, rather than implementing isolated pilots that fail to demonstrate business impact. The winners will be organizations that view AI as enabling technology for competitive differentiation, not just cost reduction.

For developers, the multi-model future demands abstraction layers, cost optimization strategies, and careful model selection based on task requirements. The days of defaulting to a single API for all tasks are over; success requires understanding the strengths and weaknesses of GPT-5.1 Instant, GPT-5.1 Thinking, Claude, Gemini, and open-source alternatives.
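One way to picture the abstraction layer described for developers is a small router that hides provider details behind named backends. This is a minimal sketch under stated assumptions: the Task fields, the ModelRouter class, the routing policy, and the "fast" and "reasoning" backend names are all hypothetical, and the backends are local stubs rather than real provider SDK calls.

```python
from dataclasses import dataclass
from typing import Callable, Dict

# A backend is any callable that takes a prompt and returns text. In a real
# system it would wrap a provider SDK call; here it is a local stub.
Backend = Callable[[str], str]


@dataclass(frozen=True)
class Task:
    prompt: str
    needs_multistep_reasoning: bool = False
    latency_sensitive: bool = False


class ModelRouter:
    """Maps tasks to named backends so callers never hard-code a provider."""

    def __init__(self, backends: Dict[str, Backend], default: str) -> None:
        if default not in backends:
            raise ValueError(f"unknown default backend: {default}")
        self._backends = dict(backends)
        self._default = default

    def choose(self, task: Task) -> str:
        # Hypothetical policy: pay for slower reasoning only when the task
        # needs it and the caller can tolerate the extra latency.
        wants_reasoning = (task.needs_multistep_reasoning
                           and not task.latency_sensitive)
        if wants_reasoning and "reasoning" in self._backends:
            return "reasoning"
        return self._default

    def run(self, task: Task) -> str:
        return self._backends[self.choose(task)](task.prompt)


# Stub backends standing in for real model clients (placeholder names).
router = ModelRouter(
    backends={
        "fast": lambda prompt: f"[fast] {prompt}",
        "reasoning": lambda prompt: f"[reasoning] {prompt}",
    },
    default="fast",
)

print(router.run(Task("Summarize this ticket", latency_sensitive=True)))
print(router.run(Task("Plan a schema migration", needs_multistep_reasoning=True)))
```

Because callers depend only on the Task description and the router, swapping a backend for another provider or an open-source model changes one dictionary entry rather than every call site.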

As we move into 2026, GPT-5.1 will likely be remembered not as the finish line but as a milestone on AI's journey from narrow tools to general-purpose cognitive infrastructure. The companies, investors, and developers who recognize this trajectory and position accordingly are best placed to capture disproportionate value in the decade ahead.

References and Sources

[1] "ChatGPT Reaches 700 Million Weekly Active Users" - OpenAI Press Release, November 2025

[2] "GPT-5.1 Technical System Card" - OpenAI Research, November 2025

[3] "Global AI Infrastructure Spending Approaches $2 Trillion" - Gartner Research, October 2025

[4] "Enterprise AI Adoption Surges Following GPT-5.1 Launch" - CNBC Technology News, November 13, 2025

[5] "Major Companies Deploying GPT-5.1 at Scale" - Reuters Enterprise Technology, November 2025

[6] "Developer Platforms Standardize on GPT-5 Models" - TechCrunch Developer News, November 2025

[7] "Claude vs ChatGPT: The AI Assistant Wars Heat Up" - Wired AI Coverage, November 2025

[8] "OpenAI's Competitive Position Under Scrutiny" - The Information Technology Analysis, November 2025

[9] "OpenAI Explores $500 Billion Valuation" - Bloomberg Technology, October 2025

[10] "GPT-5.1 Instant Technical Specifications" - OpenAI Developer Documentation, November 2025

[11] "Adaptive Reasoning in Large Language Models" - OpenAI Research Blog, November 2025

[12] "Benchmark Performance: GPT-5.1 vs GPT-5" - Independent AI Benchmarking Consortium, November 2025

[13] "GPT-5.1 Thinking: Faster Reasoning at Scale" - OpenAI Research Paper, November 2025

[14] "Financial Services AI Adoption Study" - Deloitte Enterprise AI Report, Q4 2025

[15] "The Architecture of Specialized Language Models" - Stanford AI Lab Research, November 2025

[16] "Tone Customization in AI Assistants" - OpenAI Product Blog, November 2025

[17] "User Experience Design in Conversational AI" - Nielsen Norman Group UX Research, 2025

[18] "RLHF for Emotional Intelligence in AI" - Anthropic & OpenAI Joint Research, 2025

[19] "Customer Support AI Performance Metrics" - Intercom Product Analytics, November 2025

[20] "Technical Documentation with GPT-5.1" - GitHub Engineering Blog, November 2025

[21] "AI in Creative Writing: Benchmark Study" - Writers Guild Creative Technology Report, 2025

[22] "Experimental Fine-Tuning Controls in GPT-5.1" - OpenAI Beta Features Documentation, November 2025

[23] "Chain-of-Thought Reasoning Optimization" - OpenAI System Card Technical Appendix, November 2025

[24] "Inference Speed Benchmarks for Reasoning Models" - MLPerf AI Performance Results, Q4 2025

[25] "Extended Reasoning Chains in GPT-5.1 Thinking" - OpenAI Research Demonstrations, November 2025

[26] "The Philosophy of AI Reasoning: Pattern Matching vs Intelligence" - MIT Technology Review, November 2025

[27] "Cognitive Science Perspectives on LLM Reasoning" - Nature Machine Intelligence, November 2025

[28] "Claude 3.5 Sonnet Technical Specifications" - Anthropic Product Documentation, October 2025

[29] "Comparative Analysis: Claude vs GPT in Coding Tasks" - Stack Overflow Developer Survey, Q4 2025

[30] "Constitutional AI and Safety Alignment" - Anthropic Research Papers, 2025

[31] "API Latency Benchmarks Across Major AI Providers" - Independent Cloud Performance Monitoring, November 2025

[32] "OpenAI API Pricing Structure" - OpenAI Official Pricing Page, November 2025

[33] "Anthropic Claude Pricing" - Anthropic Product Pricing, October 2025

[34] "Anthropic Annual Revenue and Growth Metrics" - The Information Financial Analysis, Q3 2025

[35] "OpenAI Enterprise Ecosystem and Partnerships" - Microsoft Azure AI Documentation, 2025

[36] "Claude in Regulated Industries: Healthcare and Finance" - Anthropic Enterprise Solutions, 2025

[37] "Gemini 2.5 Pro Multimodal Capabilities" - Google DeepMind Product Launch, September 2025

[38] "Google's Fragmented AI Strategy: Challenges and Opportunities" - Fortune Technology Analysis, October 2025

[39] "Enterprise Data Privacy Concerns with Google AI" - Gartner Security Research, 2025

[40] "Gemini API Pricing and Cost Comparison" - Google Cloud AI Pricing Page, November 2025

[41] "Meta Llama 4: 405B Parameter Open-Source Model" - Meta AI Research, August 2025

[42] "Mistral's European AI Strategy" - Mistral AI Company Blog, 2025

[43] "Cohere Command R+ for Enterprise RAG" - Cohere Product Documentation, 2025

[44] "Ease of Use in AI API Integration" - Developer Experience Survey, Stack Overflow, 2025

[45] "Self-Hosting Economics for Large-Scale AI Deployment" - Andreessen Horowitz Infrastructure Analysis, 2025

[46] "OpenAI Valuation Trends 2024-2026" - PitchBook Venture Capital Data, November 2025

[47] "AI Company Valuations in the Post-Hype Era" - CB Insights Market Analysis, Q4 2025

[48] "ChatGPT User Growth and Network Effects" - OpenAI Metrics Dashboard, November 2025

[49] "BNY's Enterprise AI Deployment" - Bank of New York Financial Technology Report, 2025

[50] "Morgan Stanley Wealth Management AI Integration" - Morgan Stanley Quarterly Investor Update, Q3 2025

[51] "OpenAI Revenue Growth and Business Model" - The Information Financial Coverage, October 2025

[52] "OpenAI Pricing Discounts and Competitive Pressure" - TechCrunch API Analysis, November 2025

[53] "OpenAI's Financial Performance and Losses" - Bloomberg Technology Financial Analysis, September 2025

[54] "Anthropic's Growth and Profitability Metrics" - Forbes AI Business Coverage, November 2025

[55] "Goldman Sachs AI Investment Warning" - Goldman Sachs Research Reports, Q3 2025

[56] "High-ROI AI Use Cases: Enterprise Analysis" - McKinsey Digital Research, November 2025

[57] "Intercom Customer Support AI Results" - Intercom Case Study, November 2025

[58] "Figma Design Workflow AI Integration" - Figma Engineering Blog, November 2025

[59] "AI Implementation Failure: Retail Case Study" - Harvard Business Review Technology Section, October 2025

[60] "Financial Services Compliance AI Challenges" - Deloitte Risk Advisory Report, 2025

[61] "Multi-Use-Case AI Deployment ROI Analysis" - Boston Consulting Group Digital Ventures, Q4 2025

[62] "Investment Banking AI Transformation" - Financial Times Technology Coverage, November 2025

[63] "Morgan Stanley Financial Advisor AI Adoption" - Morgan Stanley Technology Report, November 2025

[64] "Healthcare AI Market Opportunity Analysis" - Rock Health Digital Health Research, 2025

[65] "Legal Services AI Disruption" - Thomson Reuters Legal Technology Report, 2025

[66] "Law Firm AI Integration Case Studies" - American Bar Association Technology Survey, 2025

[67] "Creative Industries and AI: Threat or Opportunity?" - Columbia Journalism Review, November 2025

[68] "AI Infrastructure Market Sizing" - IDC AI Systems Research, Q4 2025

[69] "Databricks Valuation and Market Position" - Wall Street Journal Technology Coverage, October 2025

[70] "Vertical AI Applications: Healthcare Case Studies" - JAMA Digital Health, 2025

[71] "Harvey AI Legal Research Platform" - Legal Tech News, 2025

[72] "Bloomberg GPT Financial Analysis" - Bloomberg Technology Press Release, 2025

[73] "Notion AI Writing Assistant Integration" - Notion Product Blog, 2025

[74] "Canva's Text-to-Image AI Features" - Canva Design Summit, October 2025

[75] "Shopify AI-Powered Ecommerce Tools" - Shopify Partners Conference, November 2025

[76] "Meta's Proprietary AI Model Strategy" - Meta Engineering Blog, 2025

[77] "Netflix Recommendation Algorithm Evolution" - Netflix Tech Blog, 2025

[78] "Uber's Custom AI for Route Optimization" - Uber Engineering Publications, 2025

[79] "FDA-Cleared AI Medical Devices" - FDA Digital Health Center of Excellence, 2025

[80] "API Integration Best Practices for Enterprise AI" - Gartner Technology Guidance, 2025

[81] "Spotify's Hybrid AI Architecture" - Spotify Engineering Blog, November 2025

[82] "AI Model Selection Framework for Developers" - Vercel AI SDK Documentation, November 2025

[83] "Prompt Caching Cost Optimization" - OpenAI Developer Best Practices, November 2025

[84] "Batch API Pricing and Use Cases" - OpenAI API Documentation, 2025

[85] "Fintech AI Cost Optimization Case Study" - Y Combinator Startup School, Q4 2025

[86] "LangChain Multi-Provider Abstraction" - LangChain Documentation, 2025

[87] "Semantic Kernel Architecture Patterns" - Microsoft Developer Documentation, 2025

[88] "Vercel AI SDK Multi-Model Support" - Vercel Documentation, November 2025

[89] "The Economics of Specialized vs General AI Models" - Stanford HAI Economic Analysis, November 2025

[90] "Open-Source AI Competitive Dynamics" - Linux Foundation AI & Data Report, 2025

[91] "Test-Time Computation in Large Language Models" - DeepMind Research Paper, October 2025

[92] "User Preference Dynamics in AI Assistants" - UX Collective Research, 2025

[93] "Network Effects in AI Product Development" - NFX Venture Capital Research, 2025

[94] "AI API Democratization and Pricing Trends" - TechCrunch API Economy Analysis, November 2025

[95] "AI Infrastructure Investment Thesis" - Sequoia Capital Market Research, Q4 2025

Disclaimer: This analysis is provided for informational purposes only and does not constitute investment advice, financial guidance, or endorsement of any specific AI technology or company. Markets and competitive positions can shift rapidly in the technology sector. Taggart is not a licensed financial advisor and does not claim to provide professional financial guidance. Readers should conduct their own research and consult qualified professionals before making investment or strategic decisions.

Taggart Buie

Writer, Analyst, and Researcher